The NET Open Camera Concept allows users to integrate their own algorithms into a wide range of smart cameras and adjust them at any time, so that they can create their own customized vision solutions. NET supports this by providing the basic hardware and the development environment as a fully functioning system.

With closely matched vision systems and software that can be combined freely from existing algorithms, licensable libraries and OpenCV, users can create solutions that are entirely their own. This article outlines the opportunities this presents for users and programmers.

FPGA-supported (pre-)processing in the camera

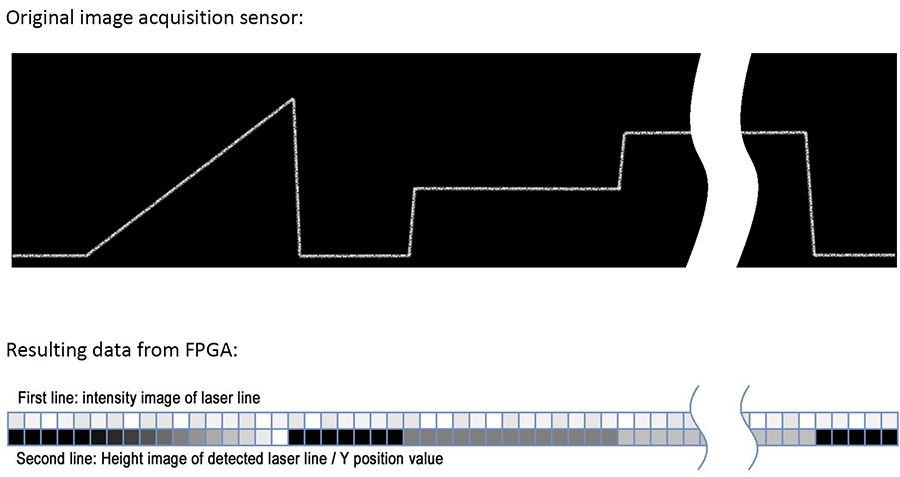

Moving image processing tasks from the host PC to compact cameras on site can speed up processing considerably in certain applications. NET’s GigE Vision camera GigEPro, for example, executes algorithms in parallel in its FPGA while retaining its compact design. The camera is not, however, intended for fully self-sufficient image processing independent of a host PC; instead, it pre-processes the image data for the host. One example is camera-integrated 3D laser triangulation, in which each 2D frame is reduced to a 1D dataset (the laser line profile) during frame analysis. Because the data is reduced in real time, significantly larger ROIs can be evaluated and transferred to the host PC. In web inspection, for example, this means that a larger area of material can be evaluated accurately and processed even faster.

The purpose of the Open Camera Concept, which is based on the NET framework, is to enable users to program their own algorithms into the camera-integrated FPGA. NET’s SynView API supplies the dedicated interface (wrapper) for the user’s VHDL code, and the SynView Explorer provides an easy-to-use GUI for controlling all standard GenICam features as well as custom functions. To program the system freely, however, users need to be familiar with FPGA programming.

Fig. 1: The GigE Vision camera GigEPro executes algorithms in parallel in its FPGA. In 3D laser triangulation, this allows 1D datasets to be obtained from 2D data during frame analysis. Reducing the data in real time enables significantly larger ROIs to be evaluated and transferred to the host PC.

Hybrid decentralized image processing

By contrast, the smart vision system Corsight can handle all image processing functions decentrally. Depending on its configuration, the system can even act as an actuator that controls peripheral devices. Its individual functional units can either work together or operate independently inline. The system’s integrated processors (Intel quad-core CPU and FPGA) can be loaded with the user’s own algorithms or used to run libraries such as Halcon, MIL, VisionPro, Zebra Aurora Vision Studio or OpenCV. Thanks to the x86 PC architecture, users can create and maintain applications in their familiar working environment (Windows 10, Linux). The system can also be combined with line scan sensors to serve as a smart line scan camera. On the software side, NET’s Open Camera Concept can be applied to the CPU as well as the FPGA. While workflow-based software solutions can be used on the PC side, programming the FPGA still requires expert knowledge. With that knowledge, the camera-integrated FPGA can be accessed through the framework and the system can be used to its full extent.

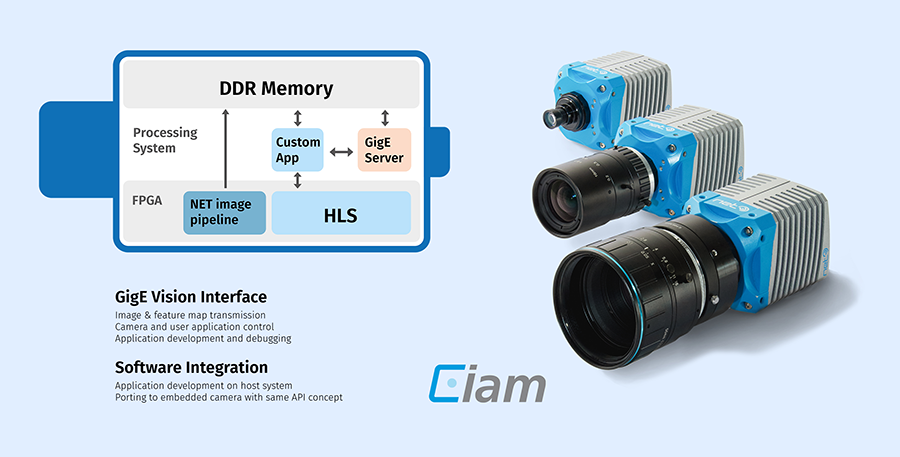

Fig. 2: iam’s processors (ARM CPU and FPGA) can be loaded with the user’s own algorithms or used to run a range of different libraries.

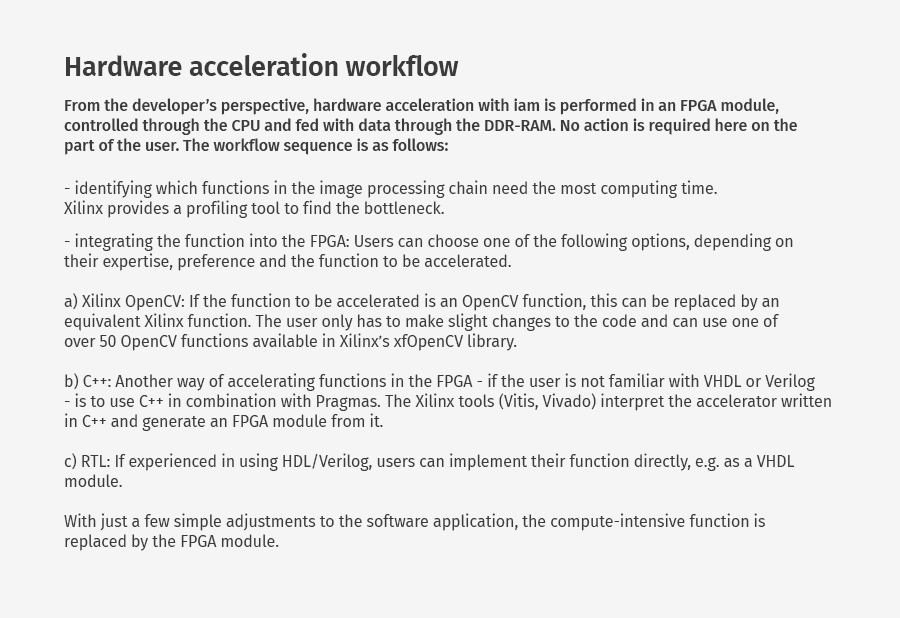

Ease of use with SoC architecture

The smart vision system iam is designed for self-sufficient processes with customized, flexible software solutions. With its various interfaces (DP+, USB, fieldbus), lens mounts (C, M42, F, M12) and optional multi-sensor adaptations, which will be introduced gradually in 2021/22, the system offers a considerably wider range of functions than Corsight. The main difference, however, lies in how applications are created. With iam, applications that use the FPGA can be written in C and C++, so software developers without VHDL experience can use the system. Because iam relies on OpenCL and Xilinx’s Vitis platform to generate the FPGA binary code automatically and uses Linux as its operating system, switching from PC-based vision systems to iam is straightforward. First-time users can also draw on a range of free tutorials from the online community that make working with the Vitis software platform easier. Alternatively, solution providers can have the programming done by an experienced system partner.

The system-on-chip (SoC) processor architecture forms the core of iam. Thanks to this hybrid platform, vision functions can be distributed between the ARM processor and the FPGA for hardware acceleration more efficiently than users could achieve by hand. The resulting gain in efficiency enables applications that keep pace with the frame rate without added latency. Hardware acceleration becomes more relevant in practice as computations become more complex. If, for example, external libraries or AI are used, iam can execute ready-made operations (image matching, blob analysis, color recognition, etc.) more efficiently thanks to the two-way memory access between the ARM processor and the FPGA that the SoC provides.

Conclusion

The Open Camera Concept offers even first-time users a high-performance, flexible hardware and software platform that helps them master the transition to ‘going smart’. Further benefits are the wide range of sensors and lenses and the various customization options. With the hardware-accelerated smart vision system iam, users can achieve maximum performance without having to radically change their working methods or their software.